Research Deepfakes & Misinformation

Summary

This writing assignment is the seventh of eight creative challenges that students complete for Writing with Artificial Intelligence, an undergraduate writing course. This challenge introduces students to research and scholarship on deepfakes, their impact on society, and the importance of understanding and combating misinformation. Students conduct textual research on how AI-generated deepfakes exploit cognitive biases, social dynamics, and information ecosystems. Students critically analyze misinformation, write an annotated bibliography, and create a deepfake or misinformation campaign.

In today’s knowledge economy, being able to identify deepfakes and other forms of misinformation is a critical AI competency, a basic literacy. Not surprisingly, the MLA-CCCC Joint Task Force on Writing and AI has argued that teaching critical AI literacy is crucial for our students and for democracy.

Introduction

In the first creative challenge for this course, Key Benefits of Writing Without AI for Students, you explored the benefits of writing without AI. Then, for the second challenge, you created an infographic on prompt engineering. You followed that challenge by learning about bots and designing a custom chatbot. Then, for creative challenge #4, you researched critical AI literacies that provide an intellectual framework to deconstruct media articles that “hype” artificial intelligence. In the fifth challenge, you surveyed the evolution of copyright laws and Lessig’s argument for “open copyright,” and then you wrote an analysis weighing whether potential copyright violations from training AI models are acceptable trade-offs for their societal benefits. Lastly, in creative challenge #6, you engaged in a qualitative, empirical research experiment to assess how GAI tools may enhance or constrain your creative and cognitive processes and your agency as a communicator.

Now, for this creative challenge, you will revisit a primary course theme: critical AI literacies. This challenge builds on the readings of the first and third creative challenges: AI Snake Oil’s “18 Pitfalls in AI Journalism,” Postman’s “Five things we need to know about technological change,” and the Civics of Technology’s “Technoskeptical Framework.” It asks you to research the architecture of deepfakes and misinformation and then to craft your own deepfake or misinformation campaign.

What Are Deepfakes and Why Do They Matter?

AI tools can make it difficult to distinguish fact from fiction.

Deepfakes are hyper-realistic but entirely fabricated audio, video, and images created using advanced AI technologies. These tools, often leveraging deep learning algorithms, can superimpose faces, alter voices, and create convincing digital fabrications that are nearly indistinguishable from authentic media. The proliferation of deepfakes has raised significant concerns about their impact on trust, democracy, and social stability.

One of the most notable platforms where manipulated media has made an impact is Facebook. With its vast user base and influential reach, Facebook has become fertile ground for the spread of misinformation and deepfakes. Critics argue that deepfakes on social media platforms like Facebook can undermine democratic processes by spreading false information and manipulating public opinion. For instance, a manipulated video of House Speaker Nancy Pelosi emerged in 2019. The footage was slowed down, rather than generated with AI, to make Pelosi appear as though she was slurring her words and struggling to speak coherently during a speech. Although it was quickly debunked, the altered video went viral, being shared millions of times across various platforms. This incident highlighted how even simple video manipulation, like deepfakes, can be used to discredit public figures and distort political discourse.

During the 2020 U.S. Presidential election, a deepfake video falsely depicted Joe Biden making inflammatory and racist remarks. The video was created using advanced AI technologies to superimpose Biden’s face onto another person’s body and manipulate his voice to say things he never actually said. In the video, Biden was made to appear as though he was endorsing white supremacist ideologies and expressing disdain for minority communities. This video was designed to discredit Biden, mislead voters, and stir social and political unrest. Although the video was quickly debunked by fact-checkers, it had already been viewed and shared by millions, highlighting the potent impact of deep fakes in spreading false information and manipulating public opinion.

What is False Information?

False information, or misinformation, includes any content that is intentionally or unintentionally false, misleading, or deceptive. This can range from completely fabricated news stories to manipulated images and videos designed to mislead viewers. Like deepfakes, the spread of false information can undermine democratic processes, erode public trust, and create social and political instability.

A notable example of misinformation is the Russian interference in the 2016 U.S. Presidential election. Russian operatives created false personas and spread misinformation through social media platforms to influence public opinion and disrupt the electoral process. The Internet Research Agency (IRA), a Russian troll farm, orchestrated a sophisticated campaign that involved creating fake social media profiles, spreading divisive content, and amplifying political discord. They targeted American voters with ads and posts designed to exploit existing social and political tensions, sowing confusion and mistrust.

The IRA’s campaign included false narratives about Hillary Clinton. These narratives involved fabricated emails, fake news articles, and social media posts that painted her as corrupt, dishonest, and involved in various illegal activities. For example, one widely circulated story falsely claimed that Clinton was involved in a child trafficking ring run out of a pizzeria in Washington, D.C., a conspiracy theory known as “Pizzagate.” Although completely baseless, this story gained significant traction, leading to real-world consequences, including a shooting incident at the pizzeria. The Russian misinformation campaign also included efforts to support Donald Trump, whom they perceived as more favorable to Russian interests. The IRA spread content that highlighted Trump’s strengths, amplified his campaign messages, and attacked his opponents. This manipulation of information was intended to sow discord among the American electorate, weaken trust in democratic institutions, and influence the outcome of the election.

During the 2022 Russian invasion of Ukraine, a deepfake video emerged depicting Ukrainian President Volodymyr Zelenskyy appearing to tell Ukrainian soldiers to surrender and lay down their arms. The video was created using AI to superimpose Zelenskyy’s face onto another person’s body and manipulate his voice. Although quickly debunked, this deepfake demonstrated how such technology could be weaponized to spread misinformation and undermine a nation’s resolve during wartime.

Former U.S. President Donald Trump has also been a target and potential source of deep fakes and misinformation. In 2020, a deep fake video surfaced appearing to show Trump calling to have the Republican Party’s leaders arrested. The video was proven to be manipulated using an AI voice cloning tool. Trump himself has also repeatedly shared misinformation, such as falsely claiming the 2020 election was “rigged” due to widespread voter fraud, despite a lack of credible evidence. He continued to push this false narrative even after his recent criminal trial, alleging without proof that the judicial process was also “rigged” against him.

What is a False Information Campaign?

A false information campaign is a coordinated effort to spread misleading or deceptive content to achieve specific objectives, such as influencing public opinion, discrediting opponents, or disrupting societal stability. These campaigns often involve multiple tactics, including creating fake social media accounts, using bots to amplify messages, and leveraging targeted advertising to reach specific audiences.

One of the most infamous false information campaigns involved Cambridge Analytica during the 2016 U.S. Presidential election. Cambridge Analytica, a political consulting firm, harvested data from millions of Facebook users without their consent. This data was then used to create detailed psychological profiles of voters, allowing for highly targeted political advertisements designed to play on voters’ fears, anxieties, and biases to manipulate public opinion. The Cambridge Analytica scandal highlighted the dangers of data misuse and the ethical implications of using personal information to manipulate democratic processes.

Why Do People Engage in or Believe Deepfakes and Misinformation?

People believe misinformation and deepfakes for a number of psychological, social, and technological reasons. They may not be thinking critically about what they see or read. Cognitive biases like confirmation bias, where people accept information that fits their pre-existing beliefs, play a major role. Social dynamics like online echo chambers and endorsement from peers in one’s network also enable misinformation to spread widely before being fact-checked. On a psychological level, our pattern-seeking brains are hard-wired to latch onto narratives that fit our existing worldviews. This bias is turbocharged by tribalism, where challenging someone’s beliefs is akin to attacking their very identity and social allegiances. Moreover, our brains are extremely susceptible to fear-mongering narratives that portray the world as a scary, unpredictable place needing simplistic explanations. Deepfakes and misinformation seamlessly tap into these existential anxieties, providing a compelling shield against the unsettling complexities of reality.

Repetition also plays a pivotal role in what information we ultimately accept as true. Encountering the same falsehoods repeatedly, whether through online echo chambers or malicious amplification by bots/trolls, can quite literally rewire our neural pathways to internalize misinformation over time.

On a social level, the perceived endorsement of falsehoods from our peers and trusted communities has an outsized impact. Emotional, provocative content spreads like wildfire through our digital networks, overriding objective analyses before fact-checks can catch up. These insular echo chambers insulate us from contradictory evidence.

Technologically, while deep fake capabilities have advanced, there has been a lack of sufficient legislation and platform accountability to curb misinformation’s reach. Major social media companies have often prioritized engagement metrics and advertising revenue over aggressively combating fake news and fabricated media hosted on their platforms. Bad actors have recognized the incredible bang for their buck in weaponizing deepfakes and misleading propaganda. Whether nation-states sowing sociopolitical chaos among rivals, corporations inflaming product hysteria, or partisan operatives manufacturing outrage, the return on investment is tantalizing. Credible-looking falsities can be generated cheaply and disseminated to massive audiences.

In essence, human evolutionary blind spots around confirmation bias and repetitive familiarity, coupled with our existential fears, social vulnerabilities, and the viral dynamics of our digital age, have created the perfect breeding ground for deepfakes and misinformation to thrive.

In light of these risks, the MLA-CCCC Joint Task Force emphasizes the urgency of developing critical AI literacies to counteract the ways deepfakes and disinformation campaigns exploit our psychological blind spots. Beyond just technical understanding of AI models, this literacy involves cultivating awareness of how they enable the cheap generation and mass dissemination of compelling-yet-misleading narratives that confirm our biases, soothe our existential anxieties, and spread rapidly through trusted social circles before fact-checking can intervene.

Writing Prompt

This is a two-part assignment:

- Create a deepfake or misinformation campaign

- Report on your deepfake/misinformation campaign. Report not only on what you did but also on how you drew on confirmation bias, tribalism, information ecosystems, and emotional appeals.

Schedule

| Meeting | Due Dates & Topics | Assignments/Activities |
| --- | --- | --- |
| 1 | Research Review – Deepfakes. Due: Social Annotation | 1. Watch Reid Hoffman meets his AI twin – Full 2. Using Perusall, complete social annotations of Creative Challenge #7 3. Review the Annotated Bibliography format 4. Review how to access the university’s databases 5. Work individually on Part 1 of Creative Challenge #7 |
| Homework | 1. Complete Step 1 for Creative Challenge #7, i.e., assigned readings/videos 2. Begin work on Part 2: writing an Annotated Bibliography on articles that address the question “How are malicious actors and sophists exploiting AI to spread misinformation and sow social discord?” | |
| 2 | Writing Workshop/Peer collaboration | 1. In class, finalize your Annotated Bibliography and microreflection 2. Time permitting, share your annotated bibliographies in class |
| Homework | 1. Finalize your annotated bibliography 2. Research tools for creating deepfakes, or research misinformation campaigns: extended, real-world efforts (e.g., the Cambridge Analytica scandal) 3. Create a deepfake or draft a plan for a misinformation campaign | |
| 3 | Collaborative Work | Share the deepfakes you found that were most interesting. Share your efforts to create a deepfake or misinformation campaign. Share the tools you’re experimenting with. |
| Homework | Complete a substantive draft of your article reporting not only what you did (i.e., your deepfake/misinformation campaign) but also how you appealed to confirmation bias, tribalism, information ecosystems, and emotional appeals | |
| 4 | Structured Revision – How to Revise Your Work | In class, work on Structured Revision |
| Homework | Project Due – Creative Challenge #7 | Follow the submission instructions for the 3 deliverables that are outlined at Creative Challenge #7 |

Step 1

- Investigate deepfakes, misinformation campaigns, and scholarly analysis on why people believe and engage with misinformation. Explore the psychological, social, technological, and financial factors that enable the spread of fabricated content like deepfakes. Focus your analysis on this question: “How are malicious actors and sophists exploiting AI to spread misinformation and sow social discord?” Check out articles on “deepfakes” at The Atlantic (login for free access). Scrutinize pieces on deepfakes at Medium as well. Use Google Scholar or university databases for deeper research. In your response, incorporate screen captures or hyperlinks exemplifying appeals to confirmation bias, tribalism, ethos, and so on. Examine how these deepfakes and misinformation emerge from online echo chambers and information silos. More specifically, your research may cover one or two of the following topics:

- Cognitive biases like confirmation bias and tribalism

- Social dynamics of online networks, echo chambers, and peer endorsement

- Lack of regulation and profit motives of tech platforms

- Financial incentives for disinformation by nations, businesses, and partisan groups

- Other key factors like desire for certainty, vulnerability to compelling narratives, conspiratorial thinking

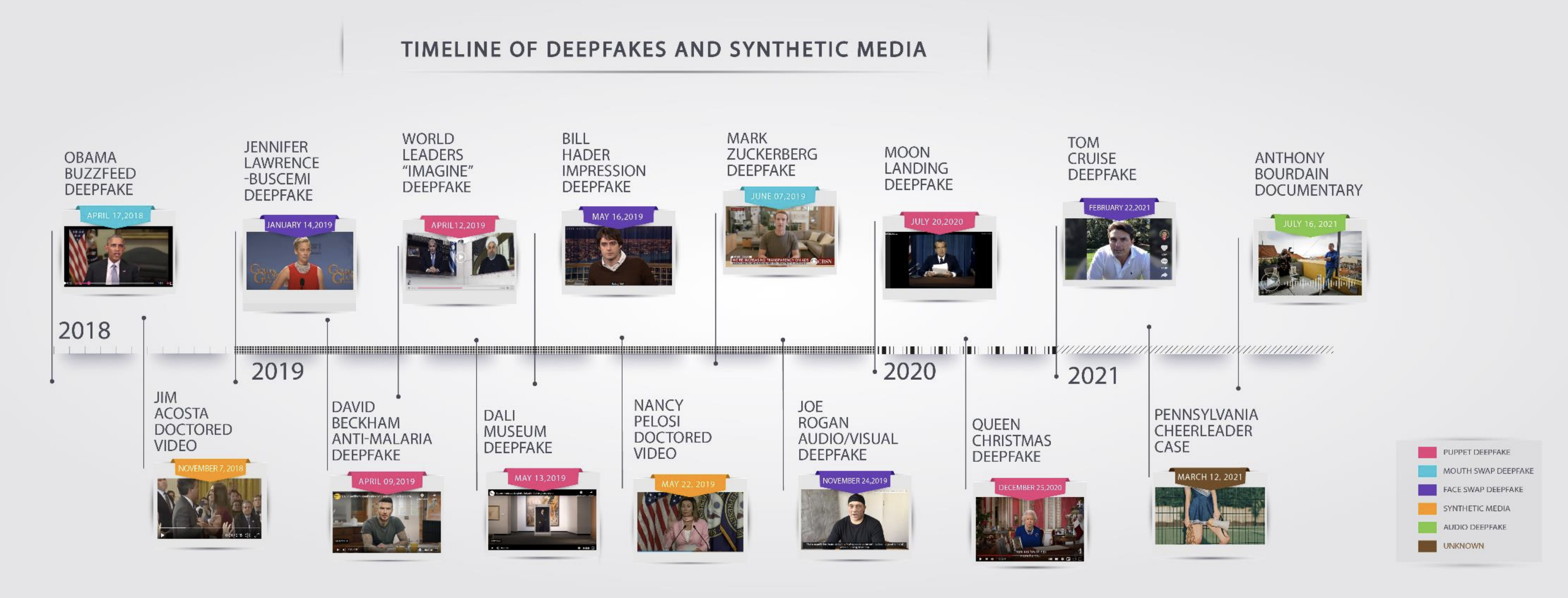

- Uncover 1-2 examples of compelling or influential deepfakes that pique your interest or provide insight. Be prepared to showcase and analyze these examples.

Step 2 – Annotated Bibliography

- Use Google Scholar or USF’s Databases to develop an annotated bibliography of at least three sources that you can use to respond to the question, “How are malicious actors and sophists exploiting AI to spread misinformation and sow social discord?”

- Next, leverage a GAI tool to generate a second annotated bibliography. (Recommended: Perplexity.ai) Critically evaluate all sources provided by the AI tool, reading them to ensure accurate representation. Here I encourage you to use different sources than the ones you found.

- Compare the two annotated bibliographies: your own and the AI’s. Be sure to check that the sources referenced by the AI tool are real and that they are accurately represented. Select the three highest-quality annotations and revise/edit as necessary.

- At the end of the annotated bibliography, write a 100 to 200-word reflection comparing your annotations to those generated by GAI tools. Analyze the AI’s performance – did it fabricate sources? Accurately summarize articles? Discuss insights gained on writing annotated bibliographies through this exercise.

Step 3 – In-Class Group Discussion

Meet in small groups to share and discuss:

- Key findings from your research on why people believe deepfakes and misinformation

- The deepfake examples you uncovered and your analysis of their significance/impact

Engage in a dialogue exploring the societal implications of deepfakes and misinformation proliferated through phenomena like confirmation bias, online echo chambers, and financial incentive structures. Discuss potential solutions from technological, regulatory, educational, and individual perspectives.

Step 4 – Create a Deepfake or Misinformation Campaign Plan

- Research AI tools enabling deepfake creation or strategize ways to develop a misinformation campaign

- For this creative challenge, using Adobe Express to swap faces in an image is acceptable. However, I encourage experimenting with AI tools specifically designed for deepfake generation.

Step 5 – Collaborative Peer Review

- Engage in peer review, sharing your deepfake experiments

Step 6 – Deliverables

- Annotated bibliography of 3 sources, which also includes a 100- to 200-word reflection

- Deepfake Image/Video or Misinformation Campaign Plan

- 500-word analysis for a college/university publication or The Atlantic/Medium, reporting on your deepfake and exploring what you learned about the question “How are malicious actors and sophists exploiting AI to spread misinformation and sow social discord?”

Recommended Resources

Articles

Homeland Security. (n.d.). Increasing Threat of Deepfakes. https://www.dhs.gov/sites/default/files/publications/increasing_threats_of_deepfake_identities_0.pdf

MLA-CCCC Joint Task Force on Writing and AI. (2023). Overview of the issues, statement of principles, and recommendations. Conference on College Composition and Communication. https://hcommons.org/app/uploads/sites/1003160/2023/07/MLA-CCCC-Joint-Task-Force-on-Writing-and-AI-Working-Paper-1.pdf